Watch video tutorial

If you'd like to learn more about Power BI performance optimization, enter your data below to get immediate access to the full 1-hour video recording with Zebra BI's CEO & Founder Andrej Lapajne.

September 8th

September 8th February 22nd

February 22nd

We've all seen slow Power BI reports. You know, reports that just take forever to load the visual. We're talking 5, 20 seconds or even a minute and more which makes them completely useless. And that's just why Power BI performance optimization is extremely important!

Here's the thing...

Speed, and thus performance, has a huge impact on user experience. Let's face it, you don't have time to waste on waiting for your reports to load, no one does. And that's just where this guide comes in! Read on to learn how to make your reports run up to 10x faster in a way that is simple and easy to follow.

Get these tips in the form of a PDF checklist: Power BI Performance Optimization Checklist [PDF].

Power BI performance optimization is a complex topic, so we'll try to make it as practical as possible. To this end, we'll cover it in three simple steps:

Whoever said Power BI performance optimization can't be fun? Let's dive right in.

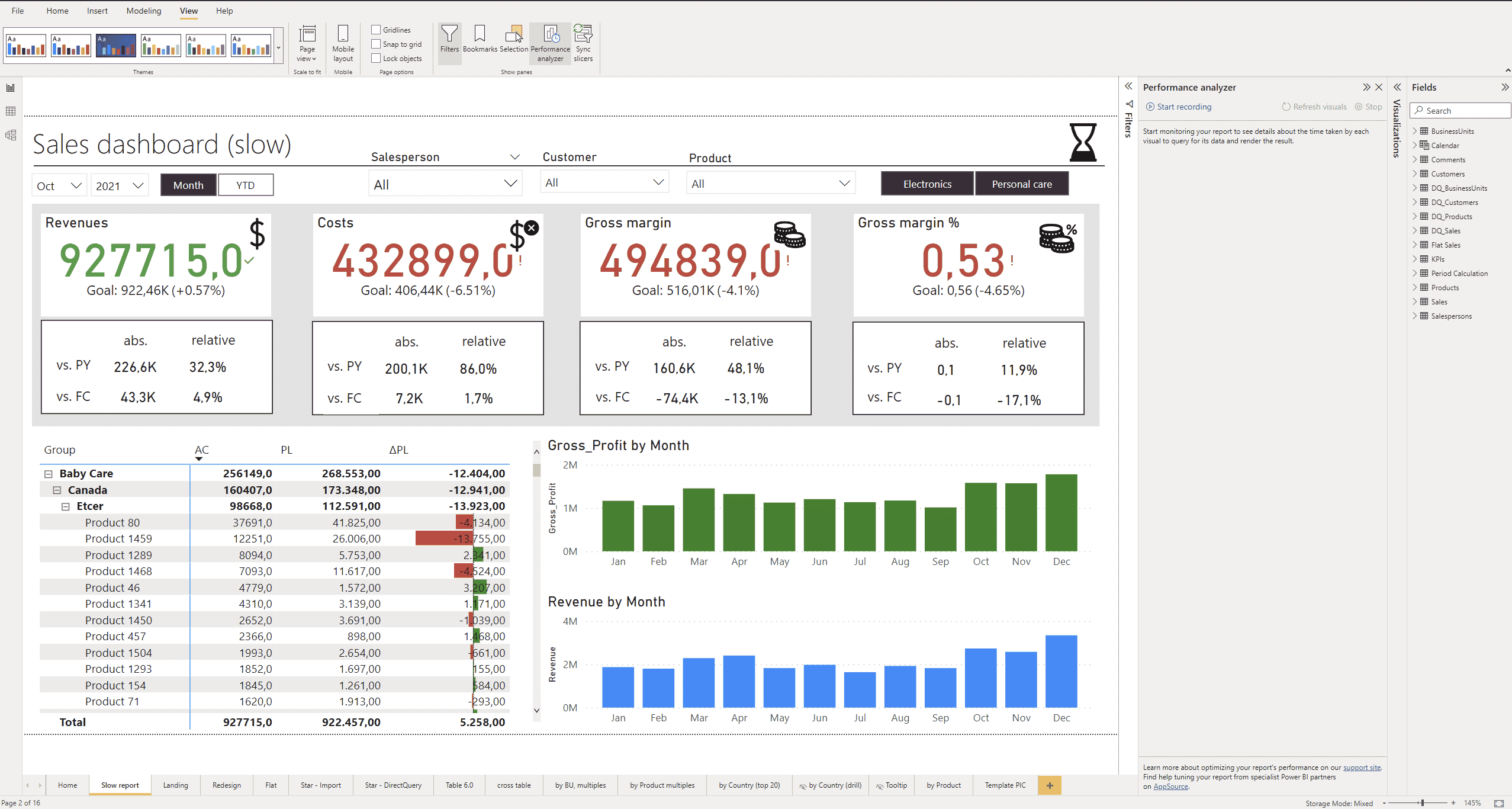

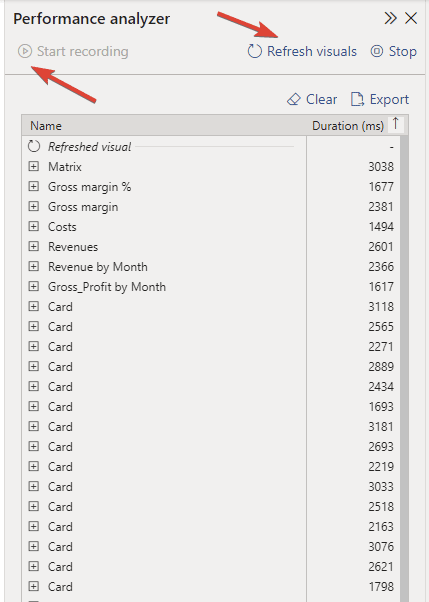

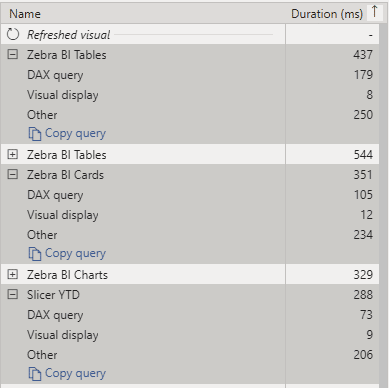

The natural starting point is Power BI Performance Analyzer, a built-in feature of Power BI Desktop. Select the View ribbon, and then select Performance Analyzer to display the Performance Analyzer pane.

In the Performance Analyzer pane, hit Start recording and then Refresh visuals. Power BI reloads visuals and records how long each of them takes to load.

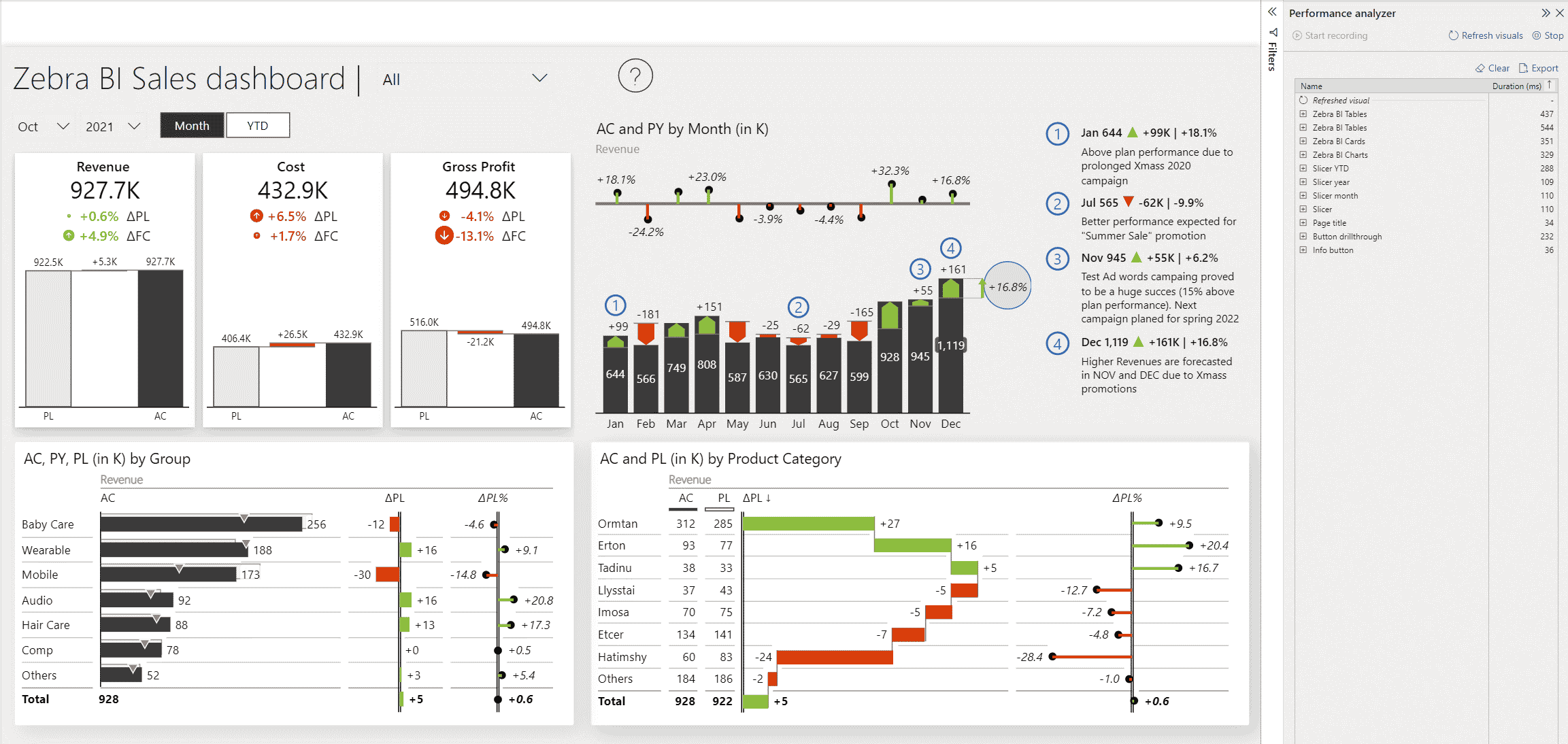

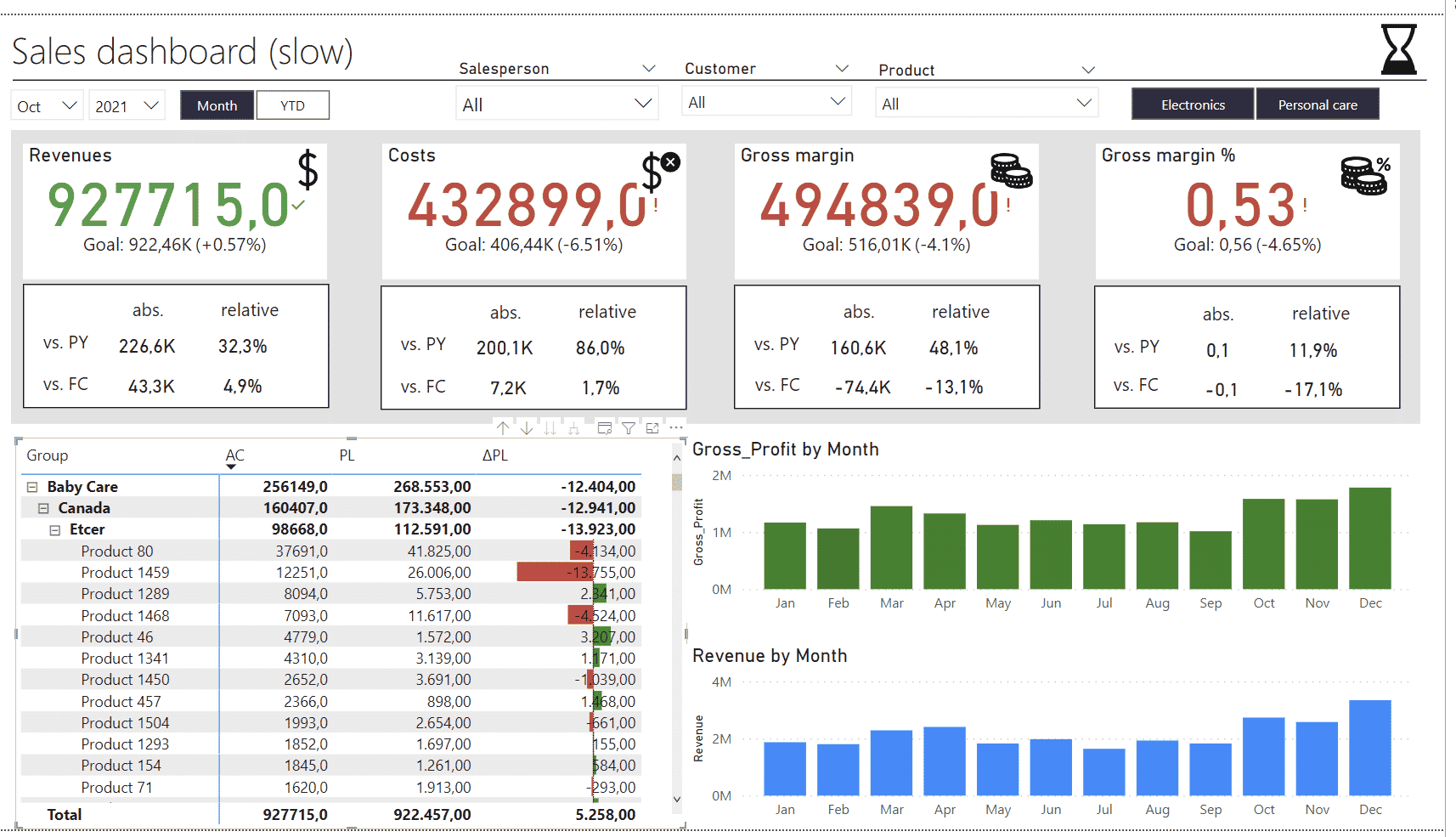

This report has a large number of elements, including images, lines, cards, charts, tables and slicers. Each of these elements adds to the time needed to query the data, and load and display the visual. What's important here is the duration of each element, displayed in milliseconds.

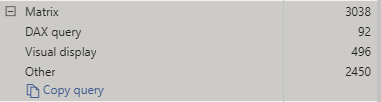

Let's take a look at what makes up the load time of one randomly selected element.

DAX query turns out to be quite fast, with the duration of just 92 milliseconds. The Visual display category reveals that it took almost 500 milliseconds for the visual to display, which is worrying. What took the longest, however, was the Other category. This one indicates how long it took for the visuals to load before the matrix visual could, which lets us know that there are simply too many visuals on the page.

But what exactly can you do about it, you may ask?

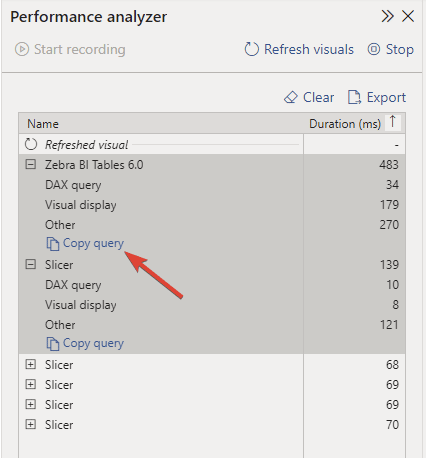

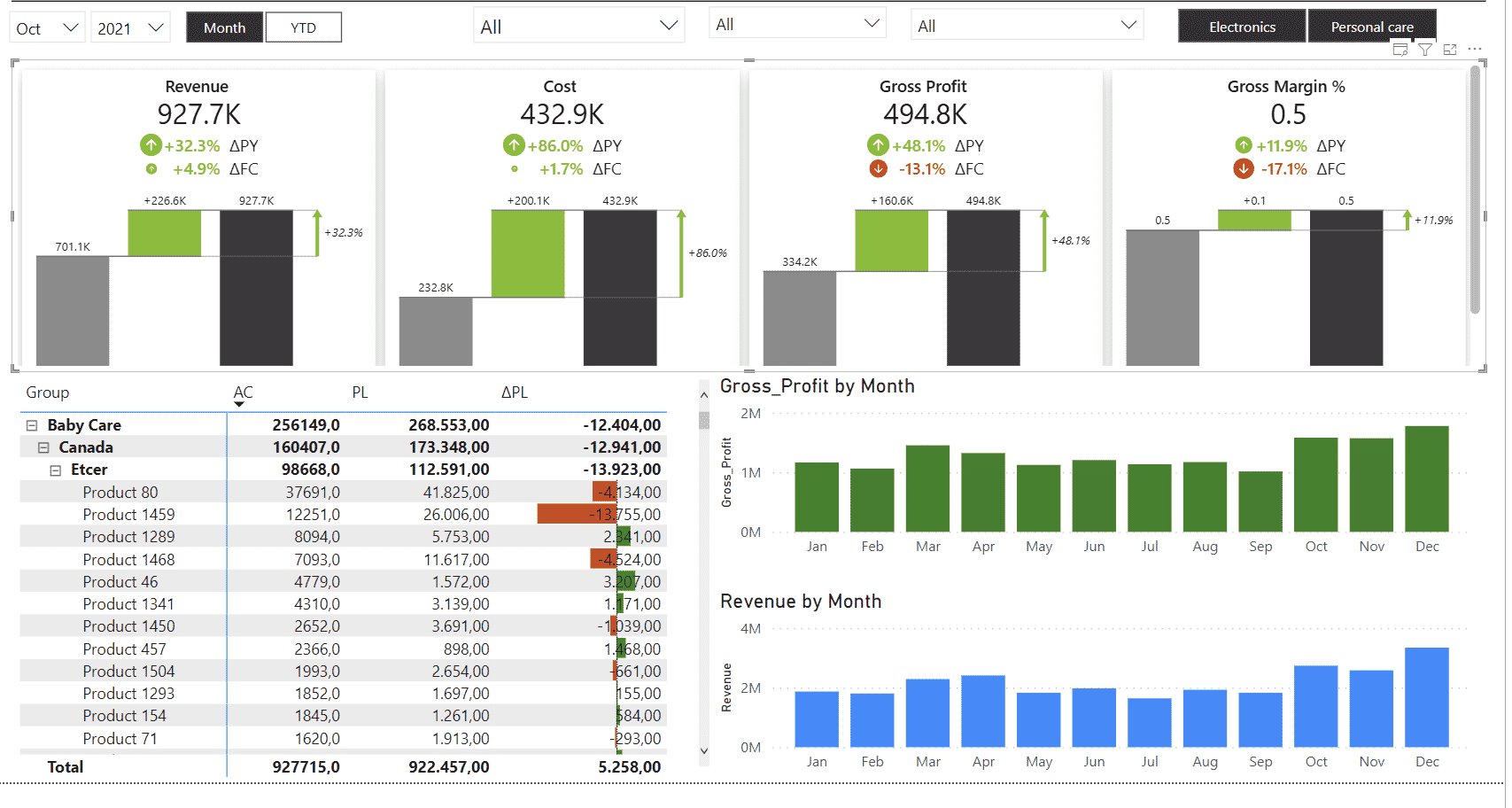

To put things into perspective, let's take a look at a Zebra BI landing page report and run another performance analysis here. To create a similar report using Zebra BI visuals, start your free trial today.

The performance report shows that this one is much faster – and here's why...

There are far fewer visuals on the page.

Essentially, we have four slicers, four content visuals, and three additional elements. That's pretty easy to manage from a performance standpoint. And even though there are fewer visuals, that doesn't mean there's less information available. After all, you can display multiple charts and much more information in every single visual.

Let's take a closer look at the report results.

Here, a DAX query took 105 seconds while the visual display only took 12 seconds. That's great! While the visual still had to wait 205 milliseconds for other elements to load, that's not a bad score.

There's another situation that can slow down your report. You might be creating tables that use DirectQuery as the connection mode. This means that the data is not loaded into Power BI. Instead, it is loaded using a query that runs on a database server. This means additional time to fetch the data.

This shows up as another item on the report, since Power BI first needs to fetch the data, process it using a PowerQuery and pass it on to a DAX command.

Yet another thing that can slow down your reports is row-level security. To check for how it affects your visuals, you can open the Modelling tab, click View as and then select the role you want to analyze. You will see that the performance may change and can review what is happening.

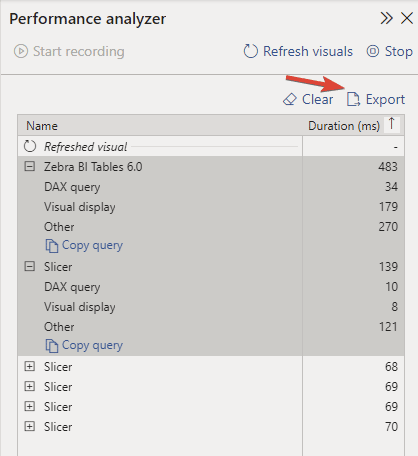

Sometimes you might want to analyze a specific DAX query in more detail. Click on the Copy query command in your Performance analyzer pane.

You can then analyze it in another tool called DAX Studio. This is a a great tool for performance analysis. It shows you the results and history of queries as well as server timings, allowing you to really get into details. If you do have a problem on the database side, then this is the right tool to do it.

Another thing you could do is export Performance analyzer report as a JSON file. It provides very detailed information on when each query started, how long each event took and so on. For basic analysis, however, the core data from Performance analyzer is detailed enough.

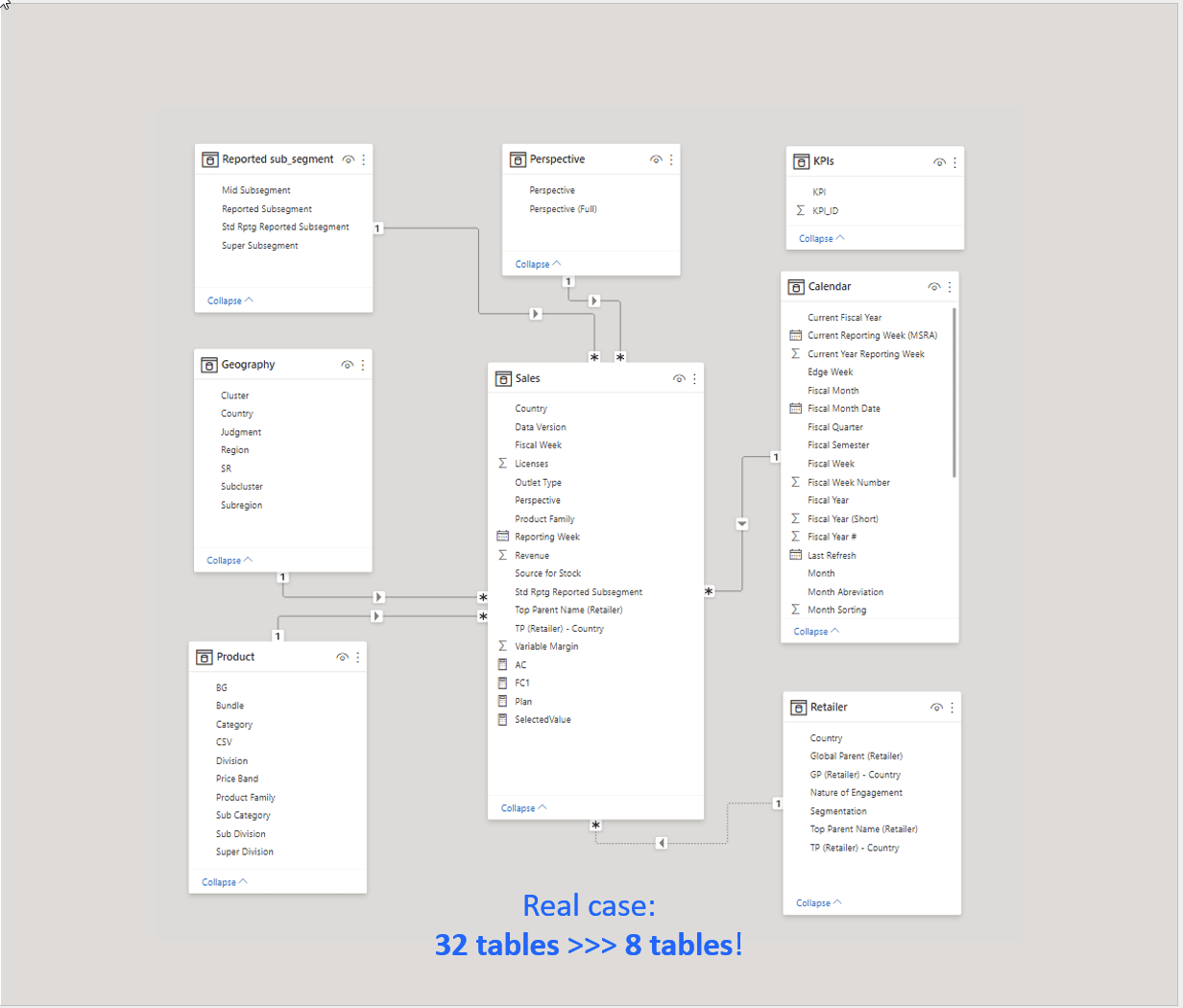

Let's start by saying that 80% of performance issues are caused by the data model. The logical data model is the number one reason for performance issues. Here is an example of a data model that will cause problems for sure.

What is it that makes your data model messy and causes it to decrease your performance? It's things like many joins, relations, bridge tables, and so on. To make it actually work, you normally need complex DAX formulas with 10 or 20 lines. A good rule of thumb is that long DAX formulas are an indication of a poor data model.

Another issue is often caused by many-to-many relationships and unused columns. Always look at whether you are actually using them. If you're not, don't include them. You can always add more data fields and columns later on. Power BI is very flexible in this regard, so you don't have to worry about it breaking your existing reports.

But what does a lean data model look like, you may ask? Take a look at the example below.

This data model actually delivers the same functionality as the previous one. However, it is created using a simple star schema and leverages only the necessary columns. The DAX formulas we used are very simple. As a result, we've managed to reduce the number of tables in the model from 32 to 8 and make it 5 to 10 times faster for typical data queries.

There are a couple of reasons why these overly complex data models keep popping up. The first is the lack of proper data preparation. People essentially just load all the tables into Power BI and start creating relationships. Then, they need to write complex DAX formulas to tie everything together. To make everything run faster, however, you need to combine tables and merge them before you even load them into Power BI.

Separated fact tables are another big issue. You might be working with a table with actual sales data and another one with plan data. People often load both of them separately and figure out the relationship between them in Power BI. For best results, these also need to be combined before loading into your data model.

Other major mistakes include using look-up tables instead of proper hierarchies and dimensions and including every possible column into it, because, hey, somebody might need it sometime.

As mentioned above, using row-level security can also be a performance killer.

So what can you do to make everything run faster?

Here are the steps you need to take to prepare your data:

Typically, you should do this in PowerQuery or on your data source (such as SQL server) and not in DAX. In the DAX model, you do add relationships, you use DAX for your KPIs and certain additional features, such as the calculation of certain data categories.

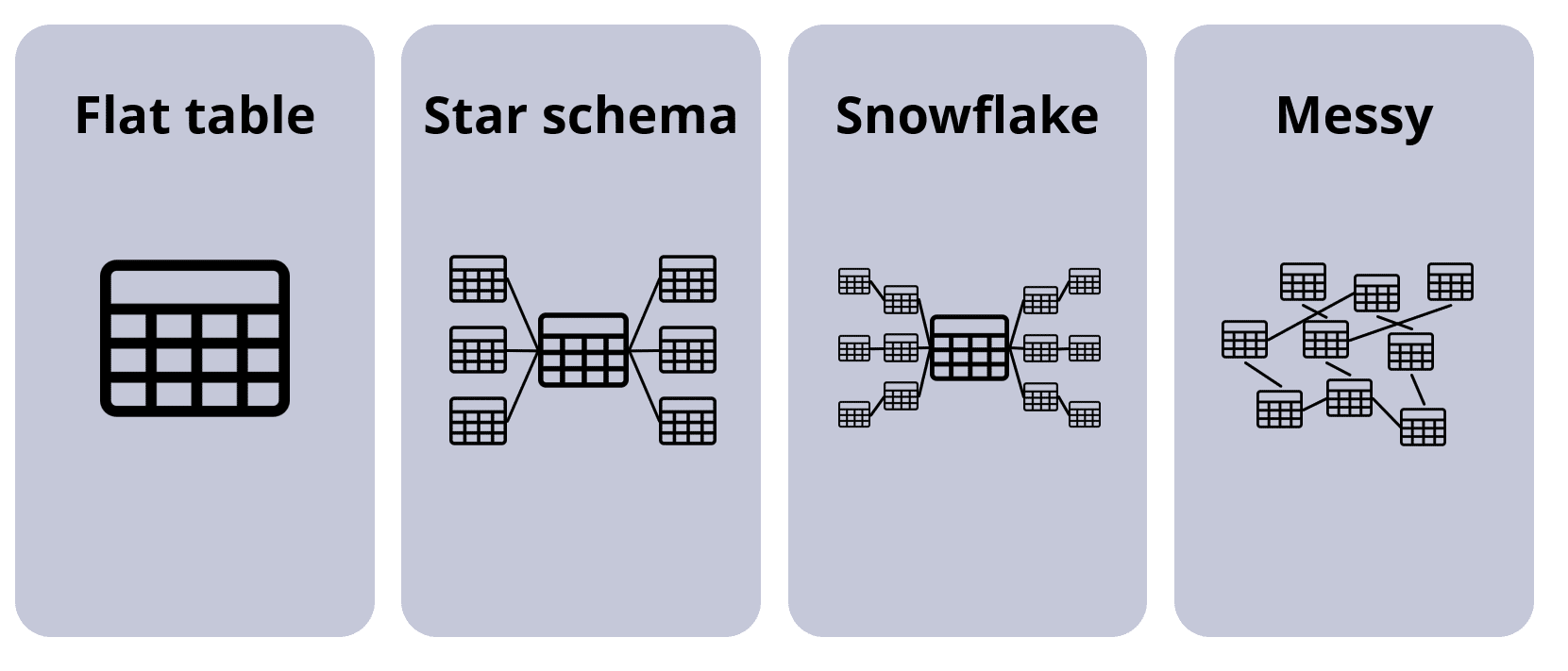

In theory, there are four data model types in Power BI.

The bottom line is that star schema is the most appropriate data model. If you have a flat table, you need to normalize it by separating the dimensions into a star schema. If you have a snowflake schema, merge and combine the tables for dimensions to arrive at the star schema.

Why is choosing the right model so important?

Technical tweaks of visuals can only get you so far. You can expect a 5% improvement. But the factor that impacts your performance the most is a logical data model and the way your data is organized.

Here are some rules that will help you make this data model run really fast.

In dimension tables, hide the foreign keys. All other values will be text. Also, make sure to remove any unnecessary columns to keep everything lean and clean.

When it comes to the Calendar dimension, use Date or DateID, not the DateTime data type. The latter is basically a timestamp with the number of milliseconds and seconds, which is why it's far too detailed.

Avoid calculated columns. You don't normally need the calculated columns and should just use measures. If you need something special, you can do the calculation in PowerQuery and generate the needed column as a normal column in a dimension.

The final tip is to remove the auto-date table in Power BI.

There are many calculations that you can do in DAX. For example, calendar dimension calculations are something we've done in our tutorials and webinars. If you're experiencing performance issues, however, you'll want to move these things down the BI stack.

That means that you are not calculating these things every time a report is loaded or refreshed. Instead, calculations are done at the level of PowerQuery or your data source. Data is calculated before you import it into your Power BI report.

Also, you should always use simple DAX formulas, typically for non-additive measures.

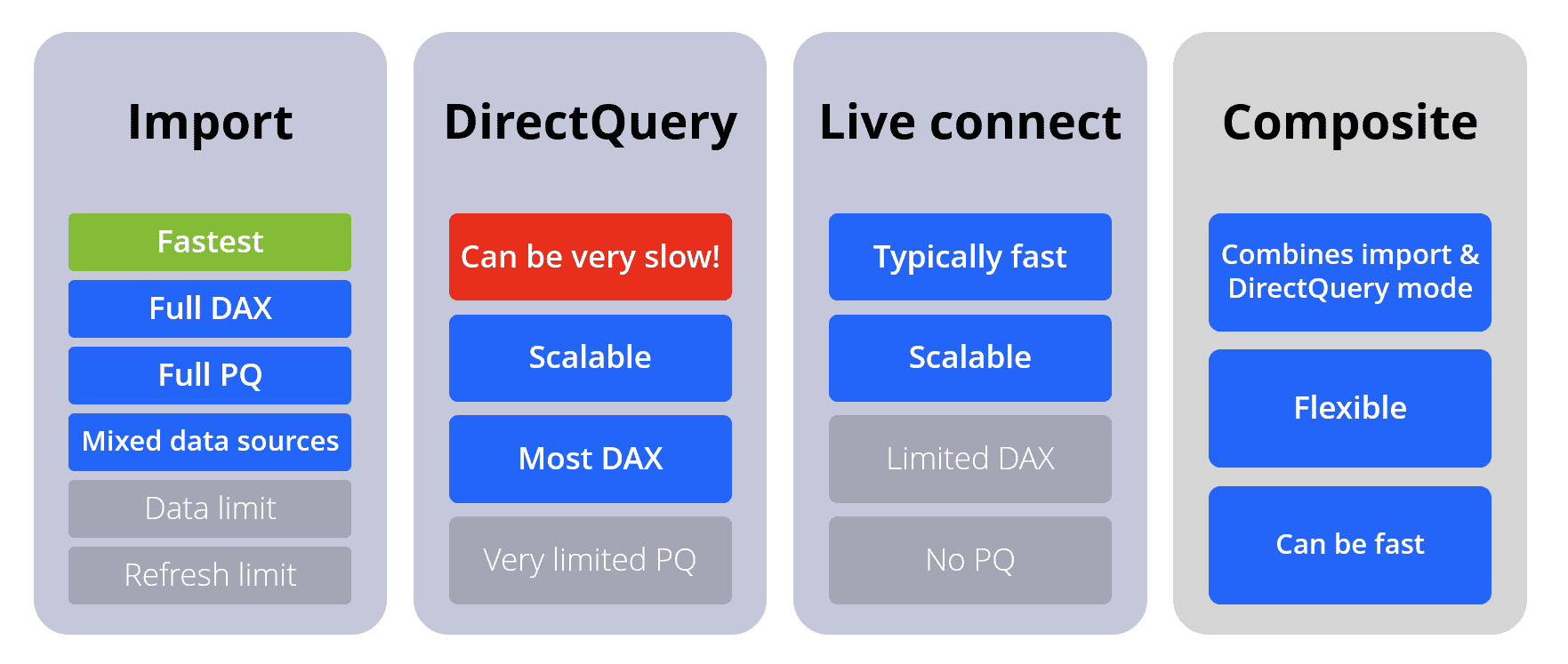

You have several data connection methods in Power BI, each with its own benefits and drawbacks.

The fastest method is Import mode. Essentially, this mode allows you to load the data once into Power BI, where it is then stored. Power BI uses the in-memory VertiPaq engine, which is exceptionally fast and delivers results almost immediately. It offers full DAX and PowerQuery support. The drawback is that it only permits you to refresh data eight times per day.

To sidestep this issue, more demanding customers often switch to DirectQuery, which can be slow. You need to do your Power BI performance optimization at the source, which means you probably need a database administrator. And you will need to do these optimizations if you want a responsive report.

The third method is Live Connection. You use it to connect to Azure Analysis Services or Azure Synapse. These databases are very similar to those used by Power BI, which delivers great performance. The drawbacks are limited DAX support and no PowerQuery functionalities.

The final method is the composite model. This is the recommended option if you need to use DirectQuery, because it compensates for some drawbacks.

Last but not least, avoid row-level security, which can be a performance killer. If you need it, make sure you are working with a clean star schema model. Try to edit it with simple rules and apply it to dimensions only.

Also, consider alternatives. When you are using simple rules, one option is to split your model into two separate reports and two separate datasets for different audiences. In this case, you don't need row-level security and both reports will work faster just because you split them into several parts.

There is one thing that pops up almost always when we are seeing performance issues. It's putting too many elements into a report. Let's take another look at the report we analyzed at the beginning.

In our test, this report takes 5 seconds to load and you want to have it under 1 second. This is an extreme case, where every single part of the four card-type visuals is a separate element. Each individual part is its own visual. That makes it really slow.

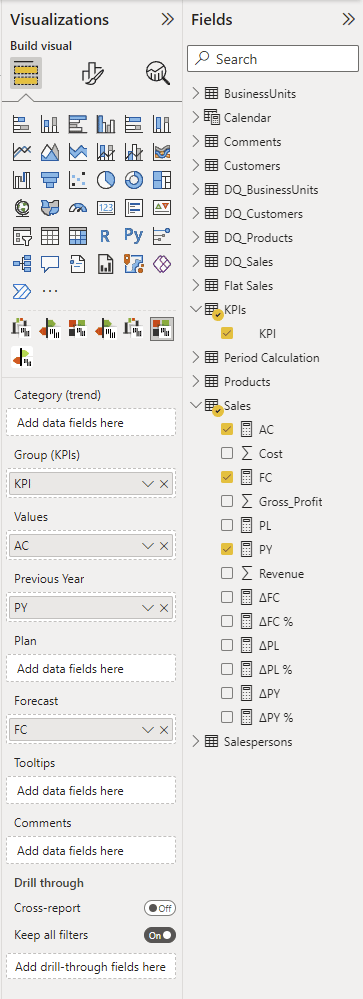

We'll do a quick redesign and start by deleting the four groups of elements and replacing them with a Zebra BI Cards visual.

Once we've done that, our performance analysis has shown that the load time has gone down to 700 milliseconds.

Now, we add the Zebra BI Cards custom visual and add it to our report. You then need to add individual measures (actuals, previous year and forecast) and group them by KPIs.

We've replaced the complex group of visuals with a single clean visual that conveys the same information and still keeps the load time under one second.

We call this approach consolidating your visuals. In practice, this means that we are working with fewer visual but are not making any compromises when it comes to information. The same information is still there, it's just presented better.

Presenting your data in a multi-card type visuals is the best practice for this case.

Another powerful tool for consolidating your visuals is cross tables. Instead of having multiple tables, you can have a single table to display the relationships between two or more groups.

In the example above, you have a regular table with basic measures that then includes a period calculation to display more information. Since all of this is included in a single visual, it is still very fast.

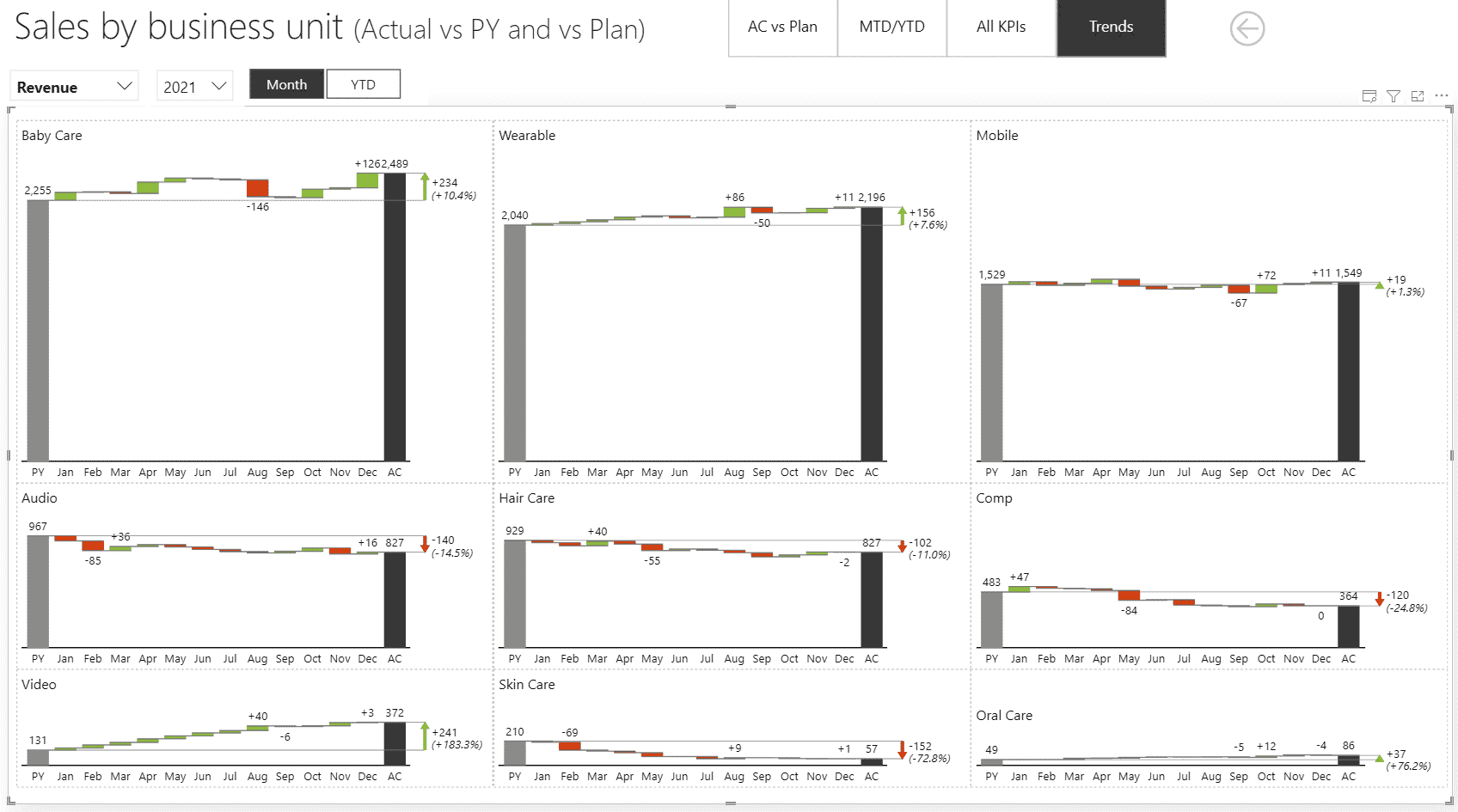

The last helpful tool when it comes to selecting visuals is small multiples. This is another brilliant tool for improving the performance of your reports. For example, we've created an example report page with 9 charts for each individual group. It only took 33 milliseconds to render.

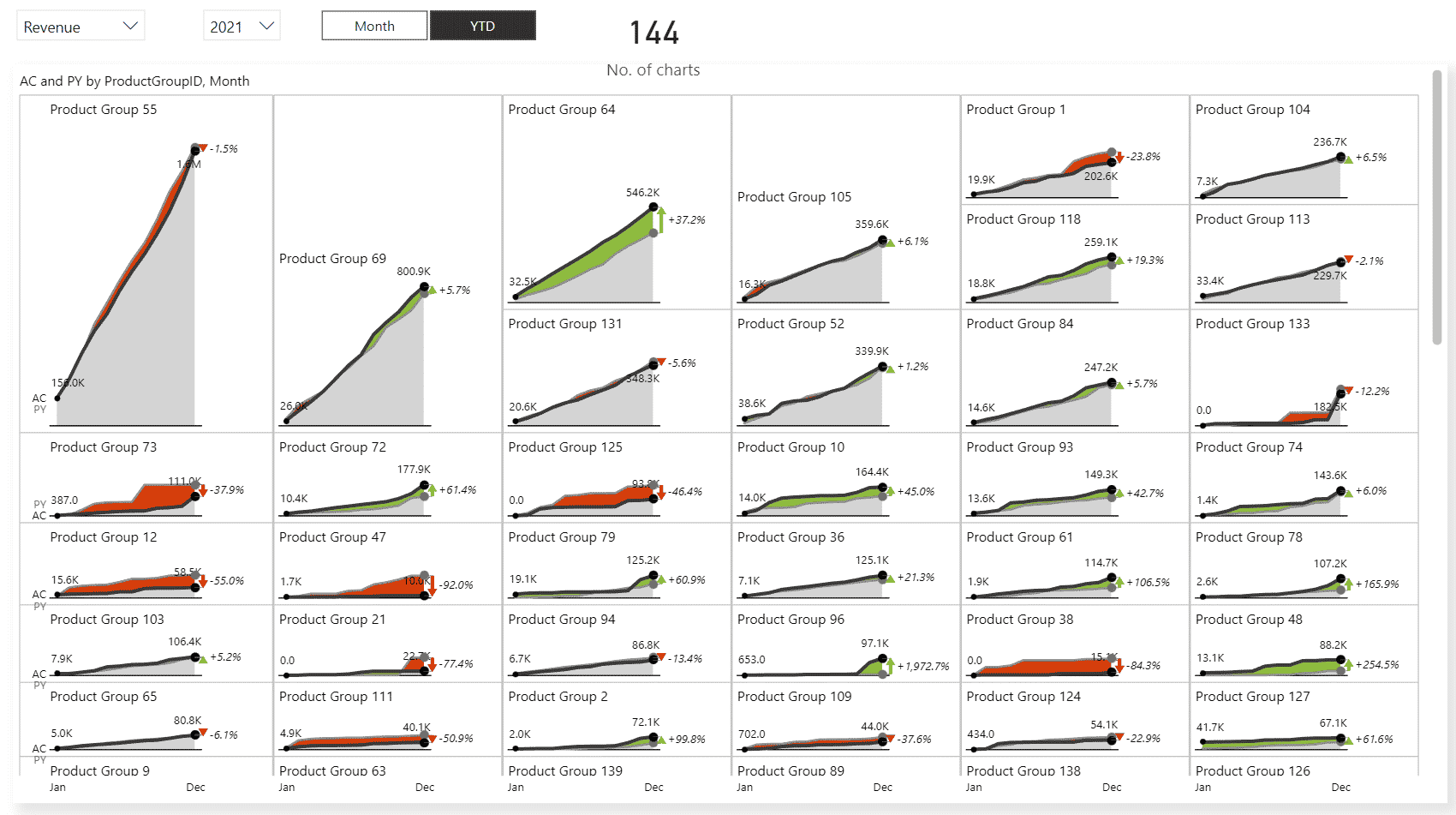

This report is running in Import data mode and is ridiculously fast. Of course, adding more charts will slow down the performance somewhat. As a test, we've created a report with 144 charts – one for each individual product.

The results even surprised us. The render time was still under half a second.

As you consolidate your visuals, make sure that you start with a landing page that runs fast and will not take more than half a second to load. To do this, keep only the very good visuals without a lot of elements that still include variances and other key information.

Why does this page need to be so fast?

People will open it, it'll load, they will see things that interest them and they will click on them. They can click on specific elements to drill through to another report page and discover more information. Once they are interested in what they see, they're prepared to wait a bit longer. But the starting point needs to be extremely fast.

Speed is crucial to maintaining a great user experience. As we've seen, there are several ways of speeding up your reports and achieveing maximum Power BI performance optimization, but the best and most efficient way is to focus on your data model. This will deliver truly impressive results and is the only way to really bring the load times down to a fraction of a second.

To achieve this, start by looking at the performance of your dashboards as a project. Take a look at what it is that is slowing them down and what the main culprits are. Next, reevaluate your data model. It's only after that that you are ready to start tweaking your visuals, consolidating and streamlining them.

To fully experience all the features demonstrated in this video tutorial, please start your free trial of Zebra BI for Power BI beforehand.